Artificial Intelligence (AI) refers to the ability of machines to perform tasks that normally require human intelligence – for example, recognizing patterns, learning from experience, drawing conclusions, making predictions, or taking action – whether digitally or as the smart software behind autonomous physical systems.

On July 19, 2019, the Air Force Research Laboratory (AFRL), in partnership with IBM, unveiled Blue Raven, the world's largest neuromorphic digital synaptic supercomputer.

Today, mobile and autonomous systems are constrained by the size, weight, and power of their computing devices, commonly referred to as SWaP. The experimental Blue Raven, with its end-to-end IBM TrueNorth ecosystem, aims to improve on the state of the art by delivering the equivalent of 64 million neurons and 16 billion synapses of processing power while consuming only 40 watts, about as much as a household light bulb.
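As a quick check on these headline numbers, the short Python sketch below divides the stated synapse count by the neuron count and spreads the 40 W budget evenly across all synapses. Reading the figures in binary units (2^20 per "million", 2^30 per "billion") is an assumption, and the per-synapse power is only a crude average, since a real system also spends power on routing, memory, and I/O.

```python
# Headline Blue Raven figures as stated in the announcement (decimal units).
NEURONS_DECIMAL = 64_000_000
SYNAPSES_DECIMAL = 16_000_000_000
POWER_WATTS = 40.0

# Assumption: the same figures read in binary units
# (1 "million" = 2**20, 1 "billion" = 2**30), as chip capacities often are.
NEURONS_BINARY = 64 * 2**20
SYNAPSES_BINARY = 16 * 2**30


def synapses_per_neuron(synapses: int, neurons: int) -> float:
    """Average fan-in: how many synapses each neuron has on average."""
    return synapses / neurons


def nanowatts_per_synapse(watts: float, synapses: int) -> float:
    """Crude average power budget per synapse, in nanowatts."""
    return watts / synapses * 1e9


if __name__ == "__main__":
    print(f"decimal units: {synapses_per_neuron(SYNAPSES_DECIMAL, NEURONS_DECIMAL):.0f} synapses/neuron")
    print(f"binary units:  {synapses_per_neuron(SYNAPSES_BINARY, NEURONS_BINARY):.0f} synapses/neuron")
    print(f"power budget:  {nanowatts_per_synapse(POWER_WATTS, SYNAPSES_DECIMAL):.2f} nW per synapse")
```

Run as a script, it reports 250 synapses per neuron in decimal units, 256 in binary units, and about 2.50 nW per synapse.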

Beyond these order-of-magnitude improvements in efficiency, researchers believe that the brain-inspired neural network approach to computing will be far more efficient at pattern recognition and integrated sensory processing than systems built on conventional chips. AFRL is currently investigating applications for the technology.

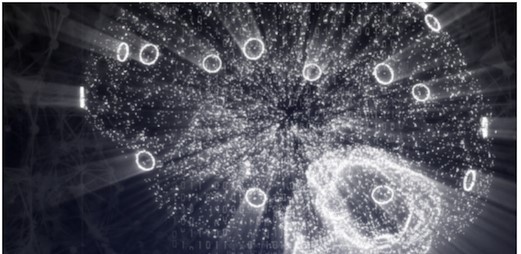

AFRL is developing Blue Raven, a neuromorphic digital synaptic computer with 64 million neurons and 16 billion synapses. That works out to roughly 250 synapses per neuron, or exactly 256 if the counts are read in powers of two (64 × 2^20 neurons and 16 × 2^30 synapses), which matches the 256 inputs per neuron of a TrueNorth core. A neuron-based design starts from a model of the biological neuron, which receives signals from other cells through synapses on its dendrites. Each synaptic input carries a weight that can be positive (excitatory) or negative (inhibitory) for that particular neuron. The brain also exhibits rhythmic oscillations, the alpha, beta, gamma, and other brainwaves, which act somewhat like internal clocks for groups of neurons. Brain-like, event-driven decoding can, for example, process only the synapses that are currently active instead of evaluating every connection.

The human brain consumes approximately 12 to 25 watts, depending on activity, whereas the AFRL artificial brain consumes 40 watts. Blue Raven therefore draws roughly 1.6 to 3.3 times as much power as the real thing, while modeling far fewer neurons.

Neuromorphic also implies the ability to adapt to certain types of problems and, in effect, build a knowledge database on the fly. This is clearly a higher-level capability.
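The neuron behavior described above, with signed synaptic weights, a firing threshold, and processing only the synapses that are active, can be sketched as a leaky integrate-and-fire model. This is a generic illustration, not IBM TrueNorth's actual neuron model; the class name, the integer weights, the linear leak, and the reset-to-zero rule are all assumptions chosen for clarity.

```python
from dataclasses import dataclass, field


@dataclass
class SpikingNeuron:
    """Hypothetical leaky integrate-and-fire neuron with signed synaptic weights."""
    weights: list[int]        # one weight per input synapse; >0 excitatory, <0 inhibitory
    threshold: int = 10       # membrane potential at which the neuron fires
    leak: int = 1             # amount the potential decays toward zero each tick
    potential: int = 0
    synaptic_ops: int = field(default=0, init=False)  # weights actually applied so far

    def tick(self, active_inputs: list[int]) -> bool:
        """Advance one clock tick, touching only the synapses that spiked."""
        for i in active_inputs:          # event-driven: silent synapses are skipped
            self.potential += self.weights[i]
            self.synaptic_ops += 1
        # Linear leak toward zero from either side.
        if self.potential > 0:
            self.potential = max(0, self.potential - self.leak)
        elif self.potential < 0:
            self.potential = min(0, self.potential + self.leak)
        if self.potential >= self.threshold:
            self.potential = 0           # reset after firing
            return True
        return False


if __name__ == "__main__":
    neuron = SpikingNeuron(weights=[6, 6, -5, 3])
    spikes = [neuron.tick(active) for active in ([0], [1], [0, 2], [])]
    print(spikes, neuron.synaptic_ops)
```

Fed the input patterns `[0]`, `[1]`, `[0, 2]`, and `[]`, this neuron fires only on the second tick, and `synaptic_ops` ends at 4, compared with 16 weight applications if all four synapses were evaluated on every tick. The inhibitory weight on input 2 is what keeps the third tick below threshold.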

VisualSim can model the synapses connected to a neuron with the VM block, with each synapse's weighted trigger stored in a large array of weights. During execution, those weights would reside somewhere in the memory hierarchy, so the cost of fetching them can be part of the model. Layers of neurons, as used in a weighted-layer design, would require either a training period or a proven, pre-configured set of weights. Once the model is running, which applications could benefit from this approach, pattern recognition in particular, still needs to be investigated. Neuromorphic adaptation is the next level in harnessing AI as a knowledge database, and it is more difficult to integrate with the rest of brain-like decision making.
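To make the weight-array idea concrete, here is a minimal Python sketch of a layered network with pre-configured integer weights, in which each spiking input causes one row of a layer's weight array to be fetched. It is not VisualSim's VM block or any VisualSim API; the function names, the row-per-input memory layout, and the single shared firing threshold are hypothetical modeling choices. Counting row fetches illustrates why event-driven processing matters when weights live in the memory hierarchy: silent inputs generate no memory traffic.

```python
from typing import Sequence

Matrix = list[list[int]]  # weights[i][j]: weight from input i to neuron j


def layer_step(weights: Matrix, active: Sequence[int], threshold: int) -> tuple[list[int], int]:
    """Propagate spikes through one pre-configured layer.

    Returns the indices of the neurons that fire and the number of weight
    rows fetched from (hypothetical) memory: one row per active input.
    """
    n_out = len(weights[0])
    potentials = [0] * n_out
    rows_fetched = 0
    for i in active:                 # only spiking inputs trigger a fetch
        row = weights[i]             # stands in for one memory read of a weight row
        rows_fetched += 1
        for j, w in enumerate(row):
            potentials[j] += w
    fired = [j for j, v in enumerate(potentials) if v >= threshold]
    return fired, rows_fetched


def network_step(layers: Sequence[Matrix], inputs: Sequence[int], threshold: int) -> tuple[list[int], int]:
    """Run one wave of spikes through every layer in order, totaling row fetches."""
    active = list(inputs)
    total_fetches = 0
    for weights in layers:
        active, fetched = layer_step(weights, active, threshold)
        total_fetches += fetched
    return active, total_fetches


if __name__ == "__main__":
    layers = [
        [[2, 0, 1], [1, 2, 0], [0, 1, 2], [2, 2, -3]],  # 4 inputs -> 3 neurons
        [[2, 0], [0, 2], [1, 1]],                       # 3 inputs -> 2 neurons
    ]
    print(network_step(layers, inputs=[0, 1], threshold=2))
```

In this two-layer example, a step in which only 2 of the 4 inputs spike and 2 neurons fire in the first layer fetches 4 weight rows in total, instead of the 7 rows a dense evaluation of both layers would read.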